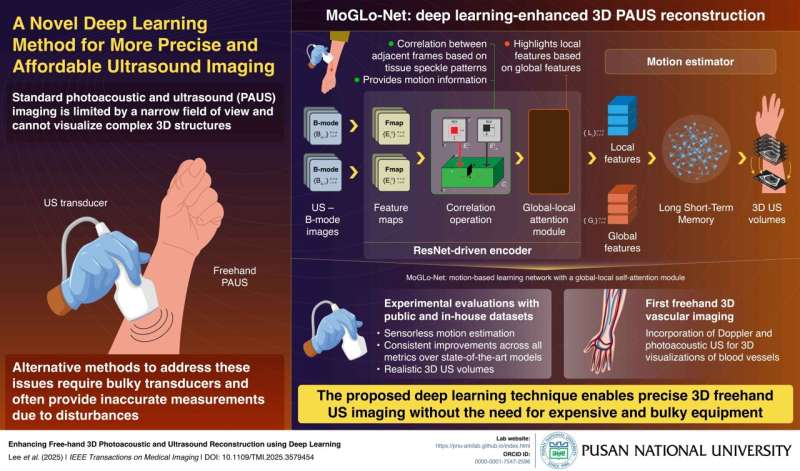

When US imaging is combined with photoacoustic (PA) imaging, in which pulses of laser light generate sound waves in tissue, the resulting technique, called PAUS imaging, offers enhanced imaging capabilities.

In PAUS imaging, a doctor holds a transducer, which emits the US or laser pulses, and guides it over the target region. While this setup is flexible, it captures only a small two-dimensional (2D) slice of the target, providing limited insight into its three-dimensional (3D) structure. Although some transducers can image in 3D directly, they are expensive and have a limited field of view.

An alternative is the 3D freehand method, in which 2D images acquired by sweeping a transducer over the body surface are stitched together into a 3D view. A key challenge of this technique, however, is tracking the transducer's motion precisely, which requires expensive, bulky external sensors that often provide inaccurate measurements.